Making it ping: Securing Announcements with RPKI a bit faster... (Part 5)

OpenBSD version: 7.1

Arch: Any

NSFP: Uhm...

So… the last thing about making things ping was RPKI. Since then, my little project grew ‘a bit’. One thing that growth entails is that I now have a couple of user VMs running on my infrastructure, so that some people can do stupid things with Inter-netting and OpenBSD of their own.

A morning oddity

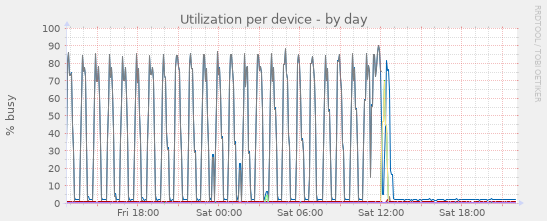

This morning, i noticed that two of my virtualization hosts have been ‘rather busy’ over the past couple of days. At least when looking at the disks (here, an image for one of them):

The odd thing is that, while the disk seems to be loaded preeeeetty well roughly once every hour (for at least half an hour each time), there does not seem to be that much traffic going through the disks…

Finding the culprit

A quick discussion with the person in charge of the VMs creating all that disk load quickly revealed that they are running OpenBSD as a BGP-enabled router… and, of course, do the right thing by running rpki-client once an hour.

Similarly of course, rpki-client should not run for half an hour, constantly hogging the system’s disks.

A look at my own box running rpki-client (which then shares the result with the rest of my AS, where it is imported via a small script) shows that rpki-client usually runs for 5-6 minutes:

/usr/sbin/rpki-client 313.63s user 28.96s system 102% cpu 5:33.53 total

On a test VM I set up in the meantime, though, rpki-client happily took around half an hour (the same as on the user’s VM), while continuously loading the disk with 2-3MB/s of traffic:

/usr/sbin/rpki-client 938.80s user 379.48s system 75% cpu 29:16.84 total

Well, turns out, first downloading a lot of RPKI data, and then validating it, creates a rather specific load pattern, which can be rather nasty (in different ways for different storage).

If we look at the RPKI cache in /var/cache/rpki-client, we are looking at roughly 230k small files spread over a rather large set of directories.

# find /var/cache/rpki-client|wc -l

228153

This combines “the bad” for filesystems (many small files and nested directory structures) with “the bad” for spinning rust based storage (manymany IOPS when rpki-client subsequently opens and closes all these files while validating things) and “the bad” for SSD/NVMe based storage (again, many IOPS with many random writes, which quickly churn through the lifetime of your SSD; Yeah, I know, noatime n stuff… yet still.).

It also explains why the whole process is so much faster on the box I run rpki-client on. It has NVMe SSDs.
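If you want to check how your own cache compares, a minimal sketch like the following summarises a directory tree: number of files, number of directories, and total size. The helper name `cache_stats` is made up for this post and is not part of rpki-client; it only uses `find`, `wc`, `du` and `awk`.

```shell
#!/bin/sh
# Sketch: summarise a directory tree (file count, directory count, size).
# cache_stats is a hypothetical helper, not something rpki-client ships.
cache_stats() {
    dir=$1
    # Count regular files and directories separately; strip wc's padding.
    files=$(find "$dir" -type f | wc -l | tr -d ' ')
    dirs=$(find "$dir" -type d | wc -l | tr -d ' ')
    # Total size in KiB as reported by du.
    kib=$(du -sk "$dir" | awk '{ print $1 }')
    echo "files=$files dirs=$dirs kib=$kib"
}
```

Calling `cache_stats /var/cache/rpki-client` as root prints one line with the three numbers. Many files combined with a small total size is exactly the pattern described above.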

How to not bother the disk

Of course, it would be nice to not bother whatever storage we have with this. Spinning disks make the process slow (and it might drag down our array’s performance), while NVMe disks might just die prematurely. Both are things we’d like to avoid.

The thing is that the RPKI cache is there for a reason: We do not want to fully grab everything whenever we run the client. Still, we are talking about roughly 550MB of data at the time of writing. Downloading that every once in a while might be ok… say… whenever we reboot the system.

So, the rather obvious solution to our little problem is putting some happy little tre… er… memory file system in place for /var/cache/rpki-client.

So, let’s purge the old cache, and mount a memory file system:

# rm -rf /var/cache/rpki-client/*

# rm -rf /var/cache/rpki-client/.r*

# echo "swap /var/cache/rpki-client mfs rw,-s2G 0 0" >> /etc/fstab

# mount -a

I am going for 2G here, because rpki-client expects at least 512MB of space and 300k inodes to be available.

We could probably have the same result by using -i 2 to adjust our inode density… but I was a bit too lazy to figure out that the mfs options are actually documented in newfs(8)… and hence went for the lazy solution of just using more space.
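For the less lazy: the `-i` option in newfs(8) is a bytes-per-inode density, so the number of inodes is roughly the file system size divided by that value. The sketch below only does this arithmetic. `bytes_per_inode` is a hypothetical helper, and the real inode count will differ somewhat, because the file system rounds things to its own layout.

```shell
#!/bin/sh
# Sketch: largest bytes-per-inode density that still yields the wanted
# number of inodes on a file system of the given size (in MiB).
# bytes_per_inode is a hypothetical helper; the result is an estimate only.
bytes_per_inode() {
    size_mib=$1
    inodes=$2
    echo $(( size_mib * 1024 * 1024 / inodes ))
}
```

For example, `bytes_per_inode 1024 300000` prints `3579`, so a 1G file system would need a density somewhat below that (rather than more space) to offer 300k inodes.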

After going for this change, we can run rpki-client again.

As expected, it is no longer hitting the disk.

Success.

At the same time, we see the memory file system filling up:

# df -hi

Filesystem Size Used Avail Capacity iused ifree %iused Mounted on

...

mfs:50017 991M 384M 557M 41% 223093 47049 83% /var/cache/rpki-client
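Since the inodes are the tighter resource here, it might be worth keeping an eye on them. A minimal sketch, assuming the column layout of the `df -hi` output shown above (the eighth column is %iused, the last one the mount point); `check_inodes` is a made-up name:

```shell
#!/bin/sh
# Sketch: read `df -hi`-style output on stdin and check inode usage.
# Usage: df -hi | check_inodes MOUNTPOINT MAX_PERCENT
# check_inodes is a hypothetical helper; the column positions are assumed
# from the df output quoted in this post.
check_inodes() {
    awk -v mp="$1" -v max="$2" '
        $NF == mp {
            pct = $8
            sub(/%/, "", pct)               # "83%" -> "83"
            printf "%s: %s%% of inodes used\n", mp, pct
            found = 1
            if (pct + 0 >= max + 0) bad = 1
        }
        # Fail if the mount point is missing or the threshold is reached.
        END { if (!found || bad) exit 1 }
    '
}
```

With the numbers above, `df -hi | check_inodes /var/cache/rpki-client 90` would still pass, while a threshold of 80 would already fail.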

Also, we nearly cut the runtime in half, actually making it CPU (not I/O) bound again:

/usr/sbin/rpki-client 857.45s user 123.96s system 100% cpu 16:13.58 total

Great success.
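The “CPU bound” claim can be read straight off the timing lines: the cpu percentage is the share of wall-clock time the process actually spent computing. A small sketch, assuming that the figure is (user + system) / total, which matches all three lines quoted in this post; `cpu_pct` is a made-up helper:

```shell
#!/bin/sh
# Sketch: CPU utilisation from user, system and wall-clock seconds.
# cpu_pct is a hypothetical helper; the result is truncated, not rounded.
cpu_pct() {
    awk -v u="$1" -v s="$2" -v r="$3" \
        'BEGIN { printf "%d\n", (u + s) / r * 100 }'
}
```

With the disk-backed test VM (29:16.84 is 1756.84 seconds), `cpu_pct 938.80 379.48 1756.84` gives 75, so about a quarter of the runtime was spent waiting. With the memory file system (16:13.58 is 973.58 seconds), `cpu_pct 857.45 123.96 973.58` gives 100.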

It is still significantly slower than on my own hardware box.

However, rpki-client also seems to have a tendency to be rather CPU bound… after being done with being disk bound… and single threaded.

And this situation is way better than where we were before…